
Let's get started!

1. Import the required libraries

```python
import requests
from selenium import webdriver
# import re
import time
from selenium.webdriver.common.by import By
import pandas as pd
from bs4 import BeautifulSoup
```

2. Log in

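```python
## 1. Log in
from selenium.webdriver.chrome.service import Service

# Recent Selenium 4 releases take the driver path through a Service object
driver = webdriver.Chrome(service=Service('C:/Windows/chromedriver.exe'))  # create the web driver
url = "https://www.instagram.com/"
driver.get(url)
time.sleep(2)

# The find_elements_by_* helpers were removed in newer Selenium 4 releases; use By instead
login = driver.find_elements(By.CSS_SELECTOR, 'input._aa4b._add6._ac4d')

email = "your_id"        # replace with your Instagram ID
pw = "your_password"     # replace with your password

login[0].send_keys(email)
login[1].send_keys(pw)
login[1].submit()  # submit the form (same effect as clicking the log-in button)
time.sleep(2)
```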

3. Enter the search keyword

- Understand the URL structure

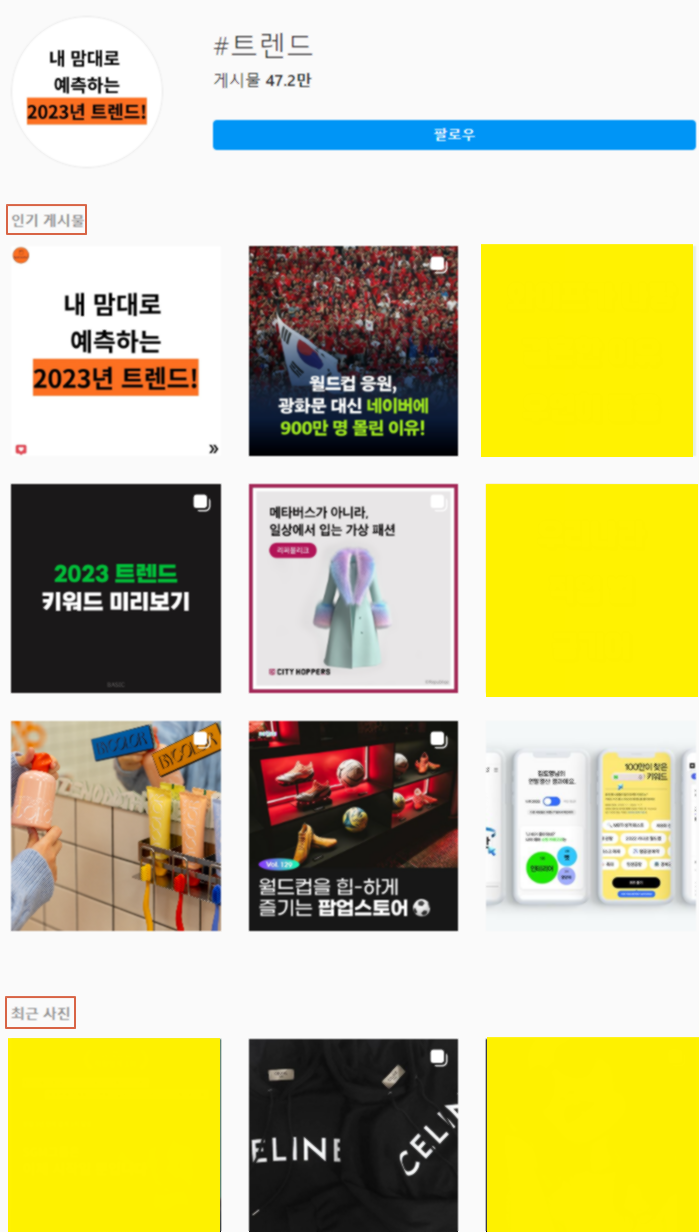

As the image shows, the hashtag you want to search for is appended to the very end of the URL.

```python
## 2. Enter the search keyword
keyword = "트렌드"  # hashtag to search for ("trend" in Korean)
searchurl = "https://www.instagram.com/explore/tags/{}/".format(keyword)
driver.get(searchurl)
time.sleep(2)
```
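Since the hashtag is simply the last path segment, building the URL can be wrapped in a small helper. The sketch below is only an illustration: `build_tag_url` and `BASE_URL` are hypothetical names that are not part of the original script, and percent-encoding the keyword with `urllib.parse.quote` is an added precaution for non-ASCII hashtags such as 트렌드, assuming an explicitly encoded URL is acceptable here.

```python
from urllib.parse import quote

BASE_URL = "https://www.instagram.com/explore/tags/{}/"

def build_tag_url(keyword: str) -> str:
    """Return the hashtag page URL with the keyword as the last path segment."""
    # Assumption: accept "#trend" and "trend " alike by trimming whitespace and '#'.
    tag = keyword.strip().lstrip("#")
    # safe="" percent-encodes every reserved character, including '/'.
    return BASE_URL.format(quote(tag, safe=""))

print(build_tag_url("트렌드"))
```

The result can be passed to `driver.get(...)` in place of the manually formatted `searchurl`.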

4. Prepare the DataFrame

```python
## 3. Prepare the DataFrame
table = pd.DataFrame(columns=['username', 'content'])
```

5. Crawling: recent posts only!

When you search for a hashtag on Instagram, the nine top posts are listed first, followed by the most recent posts.

So we will start crawling from the 10th post!

```python
## 4. Crawl starting from the recent posts
posting = driver.find_elements(By.CLASS_NAME, '_aagw')
posting[9].click()  # index 9 is the 10th thumbnail, i.e. the first recent post
time.sleep(2)
```
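Indexing `posting[9]` raises an `IndexError` if fewer than ten thumbnails have loaded. A small guard, sketched below, makes that case explicit. `first_recent_index` and `TOP_POST_COUNT` are hypothetical names, and the count of nine top posts is taken from the observation above; it may change with Instagram's layout.

```python
from typing import Optional

TOP_POST_COUNT = 9  # top posts listed before the recent ones (as observed in this post)

def first_recent_index(total_thumbnails: int, top_count: int = TOP_POST_COUNT) -> Optional[int]:
    """Return the 0-based index of the first recent post, or None if it has not loaded."""
    if total_thumbnails > top_count:
        return top_count
    return None

# A plain list stands in for the list of Selenium elements.
thumbnails = [f"post{i}" for i in range(12)]
idx = first_recent_index(len(thumbnails))
if idx is not None:
    print(thumbnails[idx])  # the 10th thumbnail
```

With the real element list, the same check would go in front of `posting[idx].click()`.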

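6. Extract the content with BeautifulSoup and save it to the DataFrame: 10 posts only

- For Instagram, rather than locating every element with selenium, I recommend grabbing the full HTML and extracting only what you need with BeautifulSoup. The reason is that the elements and their paths differ from post to post. On top of that, the paths are a real mess...

My wasted effort... (an example of what not to do)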

```python
# Crawl only 10 posts
for i in range(0, 10):
    # Two bare `except:` clauses in a row are a SyntaxError, so the fallbacks are nested.
    # The original `else:` branch only repeated the lookups of the `try` body and is dropped.
    try:
        # Crawl the needed content
        user = driver.find_element(By.CSS_SELECTOR, '#mount_0_0_ZI > div > div > div > div:nth-child(4) > div > div > div.x9f619.x1n2onr6.x1ja2u2z > div > div.x1uvtmcs.x4k7w5x.x1h91t0o.x1beo9mf.xaigb6o.x12ejxvf.x3igimt.xarpa2k.xedcshv.x1lytzrv.x1t2pt76.x7ja8zs.x1n2onr6.x1qrby5j.x1jfb8zj > div > div > div > div > div.xb88tzc.xw2csxc.x1odjw0f.x5fp0pe.x1qjc9v5.xjbqb8w.x1lcm9me.x1yr5g0i.xrt01vj.x10y3i5r.x47corl.xh8yej3.x15h9jz8.xr1yuqi.xkrivgy.x4ii5y1.x1gryazu.xir0mxb.x1juhsu6 > div > article > div > div._ae65 > div > div > div._aasi > div > header > div._aaqy._aaqz > div._aar0._ad95._aar1 > div.x78zum5 > div > div > div > div > a').text
        contents = driver.find_element(By.CSS_SELECTOR, '#mount_0_0_ZI > div > div > div > div:nth-child(4) > div > div > div.x9f619.x1n2onr6.x1ja2u2z > div > div.x1uvtmcs.x4k7w5x.x1h91t0o.x1beo9mf.xaigb6o.x12ejxvf.x3igimt.xarpa2k.xedcshv.x1lytzrv.x1t2pt76.x7ja8zs.x1n2onr6.x1qrby5j.x1jfb8zj > div > div > div > div > div.xb88tzc.xw2csxc.x1odjw0f.x5fp0pe.x1qjc9v5.xjbqb8w.x1lcm9me.x1yr5g0i.xrt01vj.x10y3i5r.x47corl.xh8yej3.x15h9jz8.xr1yuqi.xkrivgy.x4ii5y1.x1gryazu.xir0mxb.x1juhsu6 > div > article > div > div._ae65 > div > div > div._ae2s._ae3v._ae3w > div._ae5q._ae5r._ae5s > ul > div > li > div > div > div._a9zr > div._a9zs > span').text
        # like = driver.find_element(By.CSS_SELECTOR, '#mount_0_0_ZI > div > div > div > div:nth-child(4) > div > div > div.x9f619.x1n2onr6.x1ja2u2z > div > div.x1uvtmcs.x4k7w5x.x1h91t0o.x1beo9mf.xaigb6o.x12ejxvf.x3igimt.xarpa2k.xedcshv.x1lytzrv.x1t2pt76.x7ja8zs.x1n2onr6.x1qrby5j.x1jfb8zj > div > div > div > div > div.xb88tzc.xw2csxc.x1odjw0f.x5fp0pe.x1qjc9v5.xjbqb8w.x1lcm9me.x1yr5g0i.xrt01vj.x10y3i5r.x47corl.xh8yej3.x15h9jz8.xr1yuqi.xkrivgy.x4ii5y1.x1gryazu.xir0mxb.x1juhsu6 > div > article > div > div._ae65 > div > div > div._ae2s._ae3v._ae3w > section._ae5m._ae5n._ae5o > div > div > div > a > div > span').text
    except Exception:
        try:
            # First fallback path (the original forgot `.text` here)
            user = driver.find_element(By.CSS_SELECTOR, '#mount_0_0_ZI > div > div > div > div:nth-child(4) > div > div > div.x9f619.x1n2onr6.x1ja2u2z > div > div.x1uvtmcs.x4k7w5x.x1h91t0o.x1beo9mf.xaigb6o.x12ejxvf.x3igimt.xarpa2k.xedcshv.x1lytzrv.x1t2pt76.x7ja8zs.x1n2onr6.x1qrby5j.x1jfb8zj > div > div > div > div > div.xb88tzc.xw2csxc.x1odjw0f.x5fp0pe.x1qjc9v5.xjbqb8w.x1lcm9me.x1yr5g0i.xrt01vj.x10y3i5r.x47corl.xh8yej3.x15h9jz8.xr1yuqi.xkrivgy.x4ii5y1.x1gryazu.xir0mxb.x1juhsu6 > div > article > div > div._ae65 > div > div > div._ae2s._ae3v._ae3w > div._ae5q._ae5r._ae5s > ul > div > li > div > div > div._a9zr > h2 > div > div > a').text
            contents = driver.find_element(By.CSS_SELECTOR, '#mount_0_0_ZI > div > div > div > div:nth-child(4) > div > div > div.x9f619.x1n2onr6.x1ja2u2z > div > div.x1uvtmcs.x4k7w5x.x1h91t0o.x1beo9mf.xaigb6o.x12ejxvf.x3igimt.xarpa2k.xedcshv.x1lytzrv.x1t2pt76.x7ja8zs.x1n2onr6.x1qrby5j.x1jfb8zj > div > div > div > div > div.xb88tzc.xw2csxc.x1odjw0f.x5fp0pe.x1qjc9v5.xjbqb8w.x1lcm9me.x1yr5g0i.xrt01vj.x10y3i5r.x47corl.xh8yej3.x15h9jz8.xr1yuqi.xkrivgy.x4ii5y1.x1gryazu.xir0mxb.x1juhsu6 > div > article > div > div._ae65 > div > div > div._ae2s._ae3v._ae3w > div._ae5q._ae5r._ae5s > ul > div > li > div > div > div._a9zr > div._a9zs > span').text
        except Exception:
            # Second fallback path
            user = driver.find_element(By.CSS_SELECTOR, '#mount_0_0_ZI > div > div > div > div:nth-child(4) > div > div > div.x9f619.x1n2onr6.x1ja2u2z > div > div.x1uvtmcs.x4k7w5x.x1h91t0o.x1beo9mf.xaigb6o.x12ejxvf.x3igimt.xarpa2k.xedcshv.x1lytzrv.x1t2pt76.x7ja8zs.x1n2onr6.x1qrby5j.x1jfb8zj > div > div > div > div > div.xb88tzc.xw2csxc.x1odjw0f.x5fp0pe.x1qjc9v5.xjbqb8w.x1lcm9me.x1yr5g0i.xrt01vj.x10y3i5r.x47corl.xh8yej3.x15h9jz8.xr1yuqi.xkrivgy.x4ii5y1.x1gryazu.xir0mxb.x1juhsu6 > div > article > div > div._ae65 > div > div > div._ae2s._ae3v._ae3w > div._ae5q._ae5r._ae5s > ul > div > li > div > div > div._a9zr > h2 > div:nth-child(1) > div > a').text
            contents = driver.find_element(By.CSS_SELECTOR, '#mount_0_0_ZI > div > div > div > div:nth-child(4) > div > div > div.x9f619.x1n2onr6.x1ja2u2z > div > div.x1uvtmcs.x4k7w5x.x1h91t0o.x1beo9mf.xaigb6o.x12ejxvf.x3igimt.xarpa2k.xedcshv.x1lytzrv.x1t2pt76.x7ja8zs.x1n2onr6.x1qrby5j.x1jfb8zj > div > div > div > div > div.xb88tzc.xw2csxc.x1odjw0f.x5fp0pe.x1qjc9v5.xjbqb8w.x1lcm9me.x1yr5g0i.xrt01vj.x10y3i5r.x47corl.xh8yej3.x15h9jz8.xr1yuqi.xkrivgy.x4ii5y1.x1gryazu.xir0mxb.x1juhsu6 > div > article > div > div._ae65 > div > div > div._ae2s._ae3v._ae3w > div._ae5q._ae5r._ae5s > ul > div > li > div > div > div._a9zr > div._a9zs > span').text
    time.sleep(2)

    # Put the content into the DataFrame
    table.loc[i] = [user, contents]

    # Click the "next" button to move to the following post
    button = driver.find_element(By.CSS_SELECTOR, '#mount_0_0_ZI > div > div > div > div:nth-child(4) > div > div > div.x9f619.x1n2onr6.x1ja2u2z > div > div.x1uvtmcs.x4k7w5x.x1h91t0o.x1beo9mf.xaigb6o.x12ejxvf.x3igimt.xarpa2k.xedcshv.x1lytzrv.x1t2pt76.x7ja8zs.x1n2onr6.x1qrby5j.x1jfb8zj > div > div > div > div > div:nth-child(1) > div > div > div._aaqg._aaqh > button')
    button.click()
```

Do it this way instead!

```python
for i in range(0, 10):
    html = driver.page_source  # grab the full HTML of the current page
    soup = BeautifulSoup(html, 'html.parser')

    # Extract only the content we want
    user = soup.select("a.x1i10hfl.xjbqb8w.x6umtig.x1b1mbwd.xaqea5y.xav7gou.x9f619.x1ypdohk.xt0psk2.xe8uvvx.xdj266r.x11i5rnm.xat24cr.x1mh8g0r.xexx8yu.x4uap5.x18d9i69.xkhd6sd.x16tdsg8.x1hl2dhg.xggy1nq.x1a2a7pz._acan._acao._acat._acaw._aj1-._a6hd")
    contents = soup.select("span._aacl._aaco._aacu._aacx._aad7._aade")

    user = user[0].text
    contents = contents[0].text

    # Put the content into the DataFrame
    table.loc[i] = [user, contents]

    # Click the "next" button to move to the following post
    button = driver.find_element(By.CSS_SELECTOR, '#mount_0_0_ZI > div > div > div > div:nth-child(4) > div > div > div.x9f619.x1n2onr6.x1ja2u2z > div > div.x1uvtmcs.x4k7w5x.x1h91t0o.x1beo9mf.xaigb6o.x12ejxvf.x3igimt.xarpa2k.xedcshv.x1lytzrv.x1t2pt76.x7ja8zs.x1n2onr6.x1qrby5j.x1jfb8zj > div > div > div > div > div:nth-child(1) > div > div > div._aaqg._aaqh > button')
    button.click()
    time.sleep(2)  # moved after the click so the next post can load before page_source is read again
```
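One caveat with indexing the result of `soup.select(...)` with `[0]`: it raises an `IndexError` when nothing matches, for example on a post without a caption. Below is a minimal sketch of the same extraction using `select_one`, run against a made-up HTML snippet so that it is self-contained. The helper name `extract_post`, the shortened class names in the sample HTML, and the empty-string fallback are assumptions for illustration, not Instagram's actual markup.

```python
from bs4 import BeautifulSoup

# Made-up markup; the real Instagram class names are longer and change over time.
SAMPLE_HTML = """
<article>
  <header><a class="_acan _acao">some_user</a></header>
  <span class="_aacl _aaco">Hello #trend</span>
</article>
"""

def extract_post(html: str, user_selector: str, content_selector: str) -> tuple:
    """Return (user, content); a missing element becomes an empty string."""
    soup = BeautifulSoup(html, "html.parser")
    user_tag = soup.select_one(user_selector)        # None when nothing matches
    content_tag = soup.select_one(content_selector)
    user = user_tag.get_text(strip=True) if user_tag is not None else ""
    content = content_tag.get_text(strip=True) if content_tag is not None else ""
    return user, content

print(extract_post(SAMPLE_HTML, "a._acan._acao", "span._aacl._aaco"))
print(extract_post("<article></article>", "a._acan._acao", "span._aacl._aaco"))
```

In the crawling loop, `html` would come from `driver.page_source`, and the returned pair could be written to `table.loc[i]` directly.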

7. Save to Excel!

```python
table.to_excel("practice.xlsx")
```
